Young scientists' research

Other research by young scientists

Workload Management System for SPD Online Filter

Greben N.V., Oleynik D.A., Romanychev L.R.

SPD is an experimental facility designed to study spin physics as part of the NICA megascience project at JINR. With an estimated interaction frequency of 3 MHz, the detector is expected to generate a data flux of around 20 GB/s, equivalent to 200 PB per year. Long-term storage and analysis of such a data volume is not feasible, so the initial data stream must be reduced to a size acceptable for particular physics research, in line with the physics program of the experiment. Because the facility uses a triggerless data acquisition system, events must be unscrambled from the complex aggregated data stream before they can be filtered. SPD Online Filter will be a software and hardware complex for high-throughput processing of primary data; it will perform partial reconstruction of events and select only those of interest for the current research. The hardware component will consist of a set of multicore compute nodes, high-performance storage systems and a number of control servers. The software component will include not only applied software but also a middleware suite, SPD Online Filter – Visor, whose role is to arrange and execute multi-stage data processing procedures. This poster summarizes the architecture and implementation of a prototype of the Workload Management System, one of the key components of SPD Online Filter. The Workload Management System comprises a server component, which controls dataset processing by executing a sufficient number of jobs, and an agent application, which monitors and manages job execution on a compute node.
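The quoted figures can be cross-checked with simple arithmetic. The sketch below is not taken from the poster. It assumes decimal units (1 PB = 10^6 GB) and asks how many seconds of data taking per year make 20 GB/s add up to 200 PB, and how many bytes on average correspond to one interaction at 3 MHz. The function names are illustrative only.

```python
# Back-of-the-envelope check of the data volume quoted in the abstract.
# Assumption: decimal units, 1 PB = 10^6 GB and 1 GB = 10^9 bytes.
DATA_RATE_GB_PER_S = 20.0     # from the abstract
YEARLY_VOLUME_PB = 200.0      # from the abstract
INTERACTION_RATE_HZ = 3.0e6   # from the abstract

GB_PER_PB = 1.0e6
BYTES_PER_GB = 1.0e9
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def implied_live_seconds(volume_pb: float, rate_gb_s: float) -> float:
    """Seconds of data taking needed to accumulate volume_pb at rate_gb_s."""
    return volume_pb * GB_PER_PB / rate_gb_s


def mean_bytes_per_interaction(rate_gb_s: float, interaction_hz: float) -> float:
    """Average number of bytes produced per interaction."""
    return rate_gb_s * BYTES_PER_GB / interaction_hz


live_seconds = implied_live_seconds(YEARLY_VOLUME_PB, DATA_RATE_GB_PER_S)
live_fraction = live_seconds / SECONDS_PER_YEAR
bytes_per_interaction = mean_bytes_per_interaction(
    DATA_RATE_GB_PER_S, INTERACTION_RATE_HZ
)

print(f"implied data-taking time: {live_seconds:.2e} s per year")
print(f"fraction of a calendar year: {live_fraction:.2f}")
print(f"mean data per interaction: {bytes_per_interaction:.0f} bytes")
```

Under these assumptions the two figures are consistent if the detector takes data for roughly a third of the calendar year.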

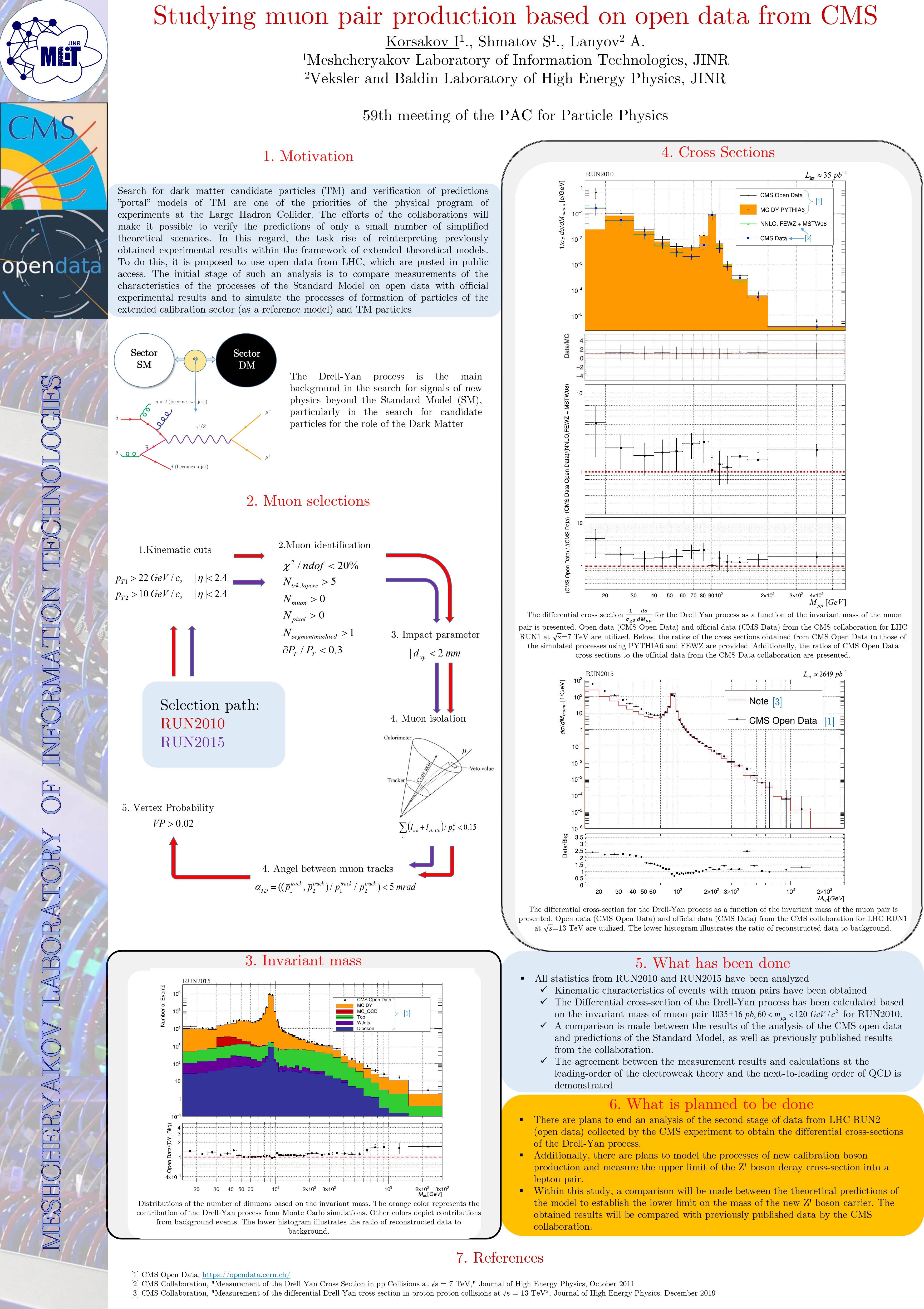

Studying muon pair production on open data from CMS

Korsakov I., Shmatov S., Lanyov A.

This paper presents the results of a study of muon pair production in the Drell-Yan process in proton-proton collisions at the LHC at center-of-mass energies of 7 and 13 TeV. The work is based on open data from the CMS experiment, collected during the first and second runs of the LHC in 2010 and 2015. Differential cross-sections of the process are obtained as a function of the invariant mass of the muon pair, and the kinematic distributions of muons and muon pairs are studied. The results of the analysis of CMS open data are compared with the predictions of the Standard Model and with results previously published by the collaboration. Partial agreement is demonstrated between the measurements and calculations at leading order in the electroweak theory and next-to-leading order in QCD. The results of this work will be used in further searches for physics beyond the Standard Model, particularly in the search for dark matter candidate particles.
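The central observable here is the invariant mass of the muon pair. As a minimal sketch, it can be computed from each muon's transverse momentum, pseudorapidity and azimuthal angle. This is standard kinematics, not the authors' analysis code. The function names, the input format and the simplified per-bin cross-section formula (no background subtraction or unfolding) are assumptions made for illustration.

```python
import math

MUON_MASS_GEV = 0.1056583745  # muon mass in GeV


def four_momentum(pt, eta, phi, mass=MUON_MASS_GEV):
    """Return (E, px, py, pz) in GeV from collider coordinates (pt, eta, phi)."""
    px = pt * math.cos(phi)
    py = pt * math.sin(phi)
    pz = pt * math.sinh(eta)
    energy = math.sqrt(px * px + py * py + pz * pz + mass * mass)
    return energy, px, py, pz


def invariant_mass(muon1, muon2):
    """Invariant mass of two muons, each given as a (pt, eta, phi) tuple."""
    e, px, py, pz = (
        a + b for a, b in zip(four_momentum(*muon1), four_momentum(*muon2))
    )
    mass_squared = e * e - px * px - py * py - pz * pz
    return math.sqrt(max(mass_squared, 0.0))  # guard against rounding below zero


def differential_cross_section(n_events, efficiency, luminosity, bin_width):
    """Simplified d(sigma)/dM for one mass bin: N / (efficiency * L * bin width).

    Illustrative assumption: n_events is already background-subtracted and
    efficiency includes acceptance. A real measurement also needs unfolding.
    """
    return n_events / (efficiency * luminosity * bin_width)


# Two back-to-back central muons with pt = 45 GeV each.
pair_mass = invariant_mass((45.0, 0.0, 0.0), (45.0, 0.0, math.pi))
print(f"dimuon invariant mass: {pair_mass:.3f} GeV")
```

Filling a histogram of `invariant_mass` over all selected pairs and applying the per-bin formula gives the shape of the mass spectrum, which is what gets compared with theory.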

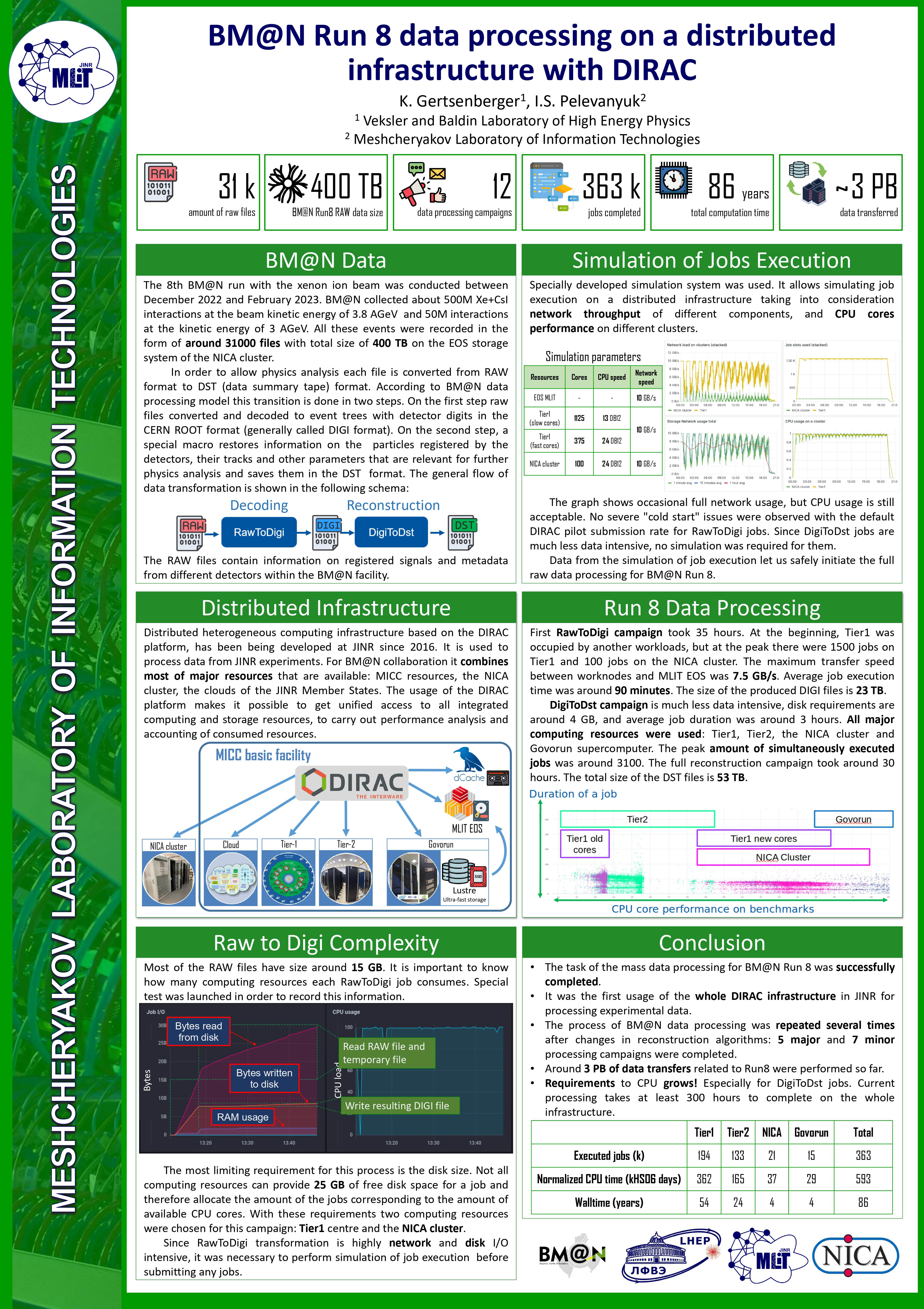

BM@N Run 8 data production on a distributed infrastructure with DIRAC

K. Gertsenberger, I.S. Pelevanyuk

The BM@N 8th physics run, using xenon ion beams, was successfully completed in February 2023 and yielded approximately 600 million events. They were recorded in around 31,000 files with a combined size exceeding 400 TB. The JINR DIRAC platform was chosen to process this data. Data processing consists of two steps: conversion from Raw to DIGI format and conversion from DIGI to DST. All available major computing resources were used: Tier1, Tier2, the NICA cluster and the “Govorun” supercomputer. A new method was developed for performing large-scale data productions. First, all types of computing jobs were thoroughly reviewed to understand their CPU, RAM and disk requirements. Next, a simulation of job execution was run to find an appropriate configuration of the distributed infrastructure. Using this configuration, all jobs were submitted for execution. Once all jobs had completed, their CPU performance and network usage were studied. All the data was processed with this approach. The processing of the BM@N 8th physics run was the first time DIRAC was used for data reconstruction at JINR. The set of approaches, systems and methods developed during this campaign will reduce the effort required for future data reconstruction at JINR.
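The abstract does not say how the job execution simulation works. As a rough illustration of the idea only, the sketch below estimates the total wall-clock time (makespan) of a job list on a candidate configuration by always handing the next job to the slot that frees up first. The site names, slot counts, speed factors and job durations are all hypothetical, and this is not the method used in the campaign.

```python
import heapq


def simulate_makespan(job_durations, sites):
    """Estimate the time to finish all jobs on a given configuration.

    job_durations: reference run times of the jobs, in hours.
    sites: dict mapping a site name to (number_of_slots, speed_factor),
           where speed_factor > 1 means jobs run faster than the reference.
    Each job goes to whichever slot becomes free first (list scheduling).
    """
    # Heap entries: (time the slot becomes free, slot id, speed factor).
    slots = []
    slot_id = 0
    for n_slots, speed in sites.values():
        for _ in range(n_slots):
            slots.append((0.0, slot_id, speed))
            slot_id += 1
    if not slots:
        raise ValueError("configuration has no job slots")
    heapq.heapify(slots)

    makespan = 0.0
    for duration in job_durations:
        free_at, sid, speed = heapq.heappop(slots)
        finished_at = free_at + duration / speed
        makespan = max(makespan, finished_at)
        heapq.heappush(slots, (finished_at, sid, speed))
    return makespan


# Hypothetical workload and two candidate configurations.
jobs = [4.0] * 8
small = {"site_a": (2, 1.0)}
large = {"site_a": (2, 1.0), "site_b": (2, 1.0)}
print("small configuration:", simulate_makespan(jobs, small), "hours")
print("large configuration:", simulate_makespan(jobs, large), "hours")
```

Comparing makespans across candidate configurations is one simple way to choose how many slots to request from each resource before submitting real jobs.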

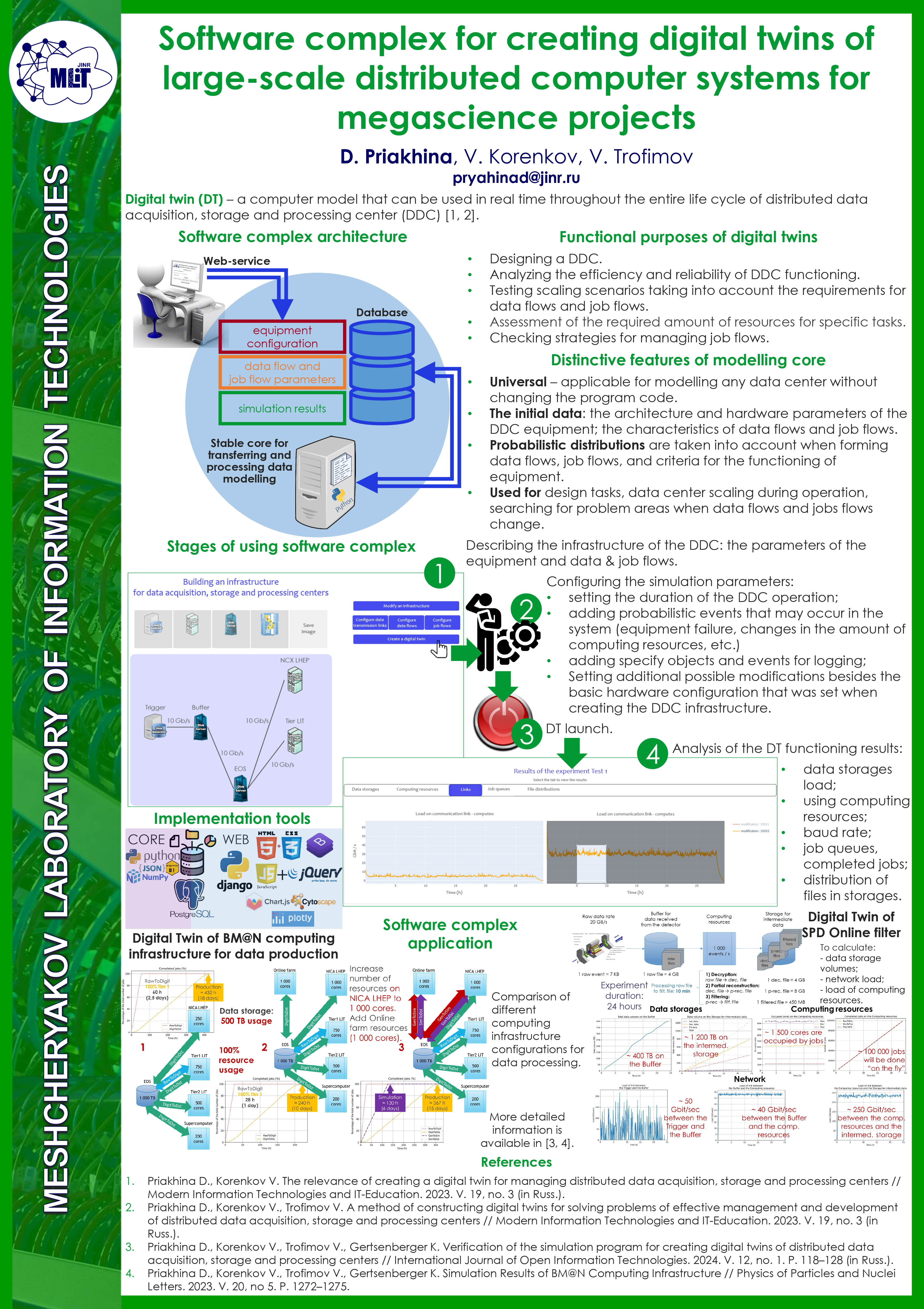

Software complex for creating digital twins of large-scale distributed computer systems for megascience projects

D. Priakhina, V. Korenkov, V. Trofimov

Modern scientific research and megascience experiments cannot exist without large-scale computing systems that make it possible to store large amounts of data and process them in a relatively short time. Such systems are distributed data acquisition, storage and processing centers. Large-scale distributed computer systems have a complex structure, include many different components and provide shared access to data storage and processing resources. A digital twin is needed for the design, support and development of distributed computer systems. It should allow one to investigate system reliability, check various scaling scenarios and determine the amount of resources needed to solve specific tasks. The Meshcheryakov Laboratory of Information Technologies (MLIT) of the Joint Institute for Nuclear Research (JINR) has developed a prototype of a software complex for creating digital twins of distributed data acquisition, storage and processing centers. Examples of its use for the computing infrastructures of the BM@N and SPD experiments of the NICA project are considered. The examples confirm that the software complex can be used further in the design and modernization of various computing infrastructures for megascience projects.

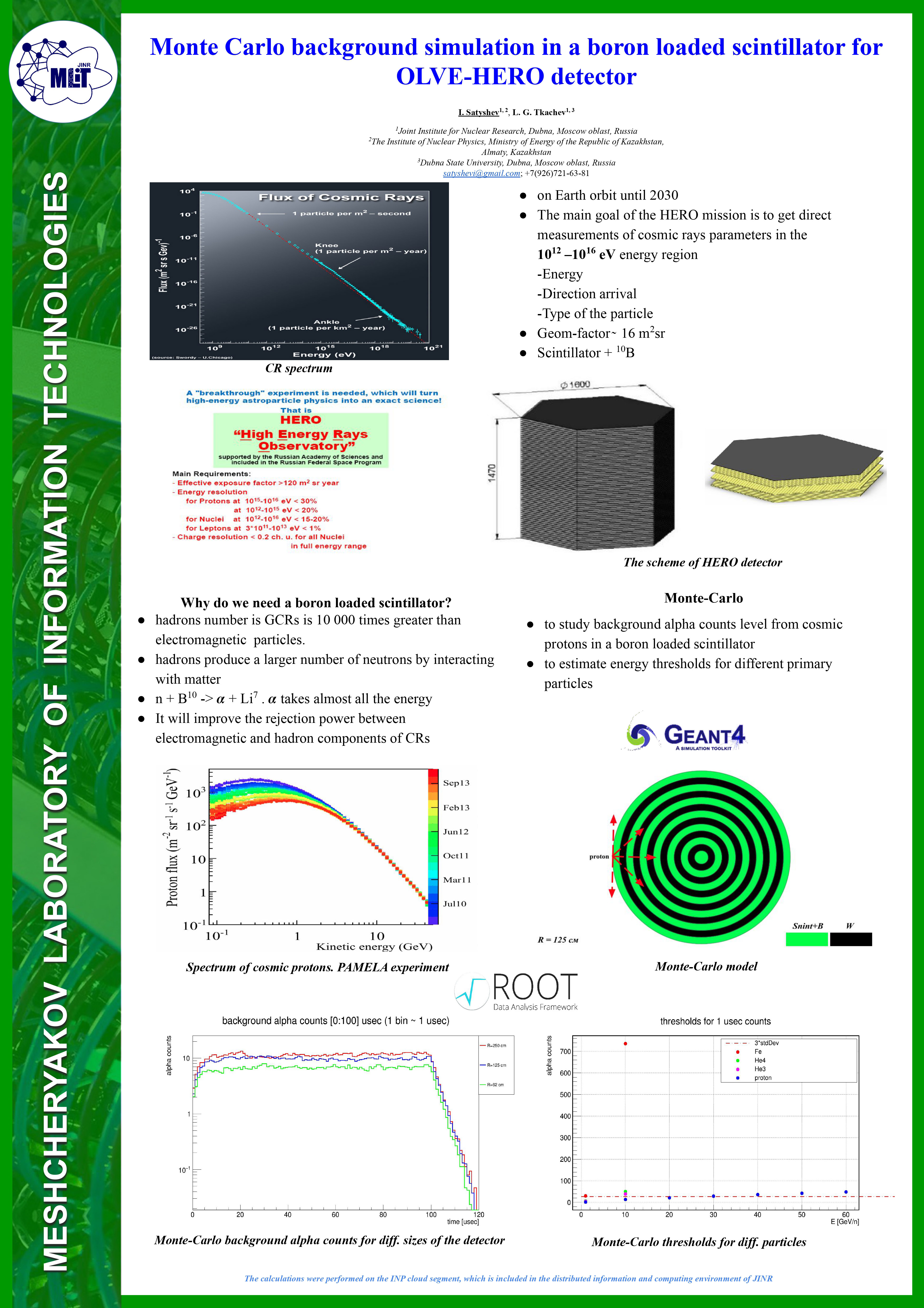

Monte Carlo background simulation in the boron-loaded scintillator of the OLVE-HERO detector

I. Satyshev, L.G. Tkachev

A project for the OLVE-HERO space detector, intended to measure cosmic rays in the range 10¹²–10¹⁶ eV, is proposed. It will include a large ionization-neutron 3D calorimeter with high granularity and a geometric factor of ∼16 m² sr. The main OLVE-HERO detector is expected to be an image calorimeter with a boron-loaded plastic scintillator and a tungsten absorber. Such a calorimeter can measure an additional neutron signal, which should improve the energy resolution of the detector as well as the rejection power between the electromagnetic and nuclear components of cosmic rays. Monte Carlo results for a simplified version of the detector exposed to the cosmic proton flux are presented. The purpose of this work is to study the background that arises when evaporation neutrons are produced in the detector, slow down to thermal energies, and are then captured by B-10 nuclei, producing α-particles with an energy of ~2 MeV.
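The α-particle energy follows from two-body kinematics of thermal neutron capture on B-10, which produces Li-7 and an α-particle. The sketch below is a non-relativistic estimate that neglects the thermal neutron's kinetic energy. The Q-values and atomic masses are standard tabulated numbers supplied here as assumptions. They do not come from the abstract, which quotes only the approximate figure of ~2 MeV.

```python
# Two-body energy sharing in n + B-10 -> Li-7 + alpha for a thermal neutron.
# Assumptions (standard tabulated values, not taken from the abstract):
M_ALPHA_U = 4.002603   # He-4 atomic mass, in u
M_LI7_U = 7.016003     # Li-7 atomic mass, in u
Q_GROUND_MEV = 2.792   # Q-value when Li-7 is left in its ground state
Q_EXCITED_MEV = 2.310  # Q-value when Li-7 is left in its first excited state


def alpha_kinetic_energy(q_mev: float) -> float:
    """Kinetic energy of the alpha particle, in MeV.

    With the initial momentum neglected, the two products carry equal and
    opposite momenta, so the lighter alpha takes the fraction
    m_Li / (m_Li + m_alpha) of the released energy.
    """
    return q_mev * M_LI7_U / (M_LI7_U + M_ALPHA_U)


e_alpha_ground = alpha_kinetic_energy(Q_GROUND_MEV)
e_alpha_excited = alpha_kinetic_energy(Q_EXCITED_MEV)
print(f"alpha energy, Li-7 ground state:  {e_alpha_ground:.2f} MeV")
print(f"alpha energy, Li-7 excited state: {e_alpha_excited:.2f} MeV")
```

Both branches give α-particle energies somewhat below 2 MeV, in line with the approximate value quoted in the abstract.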

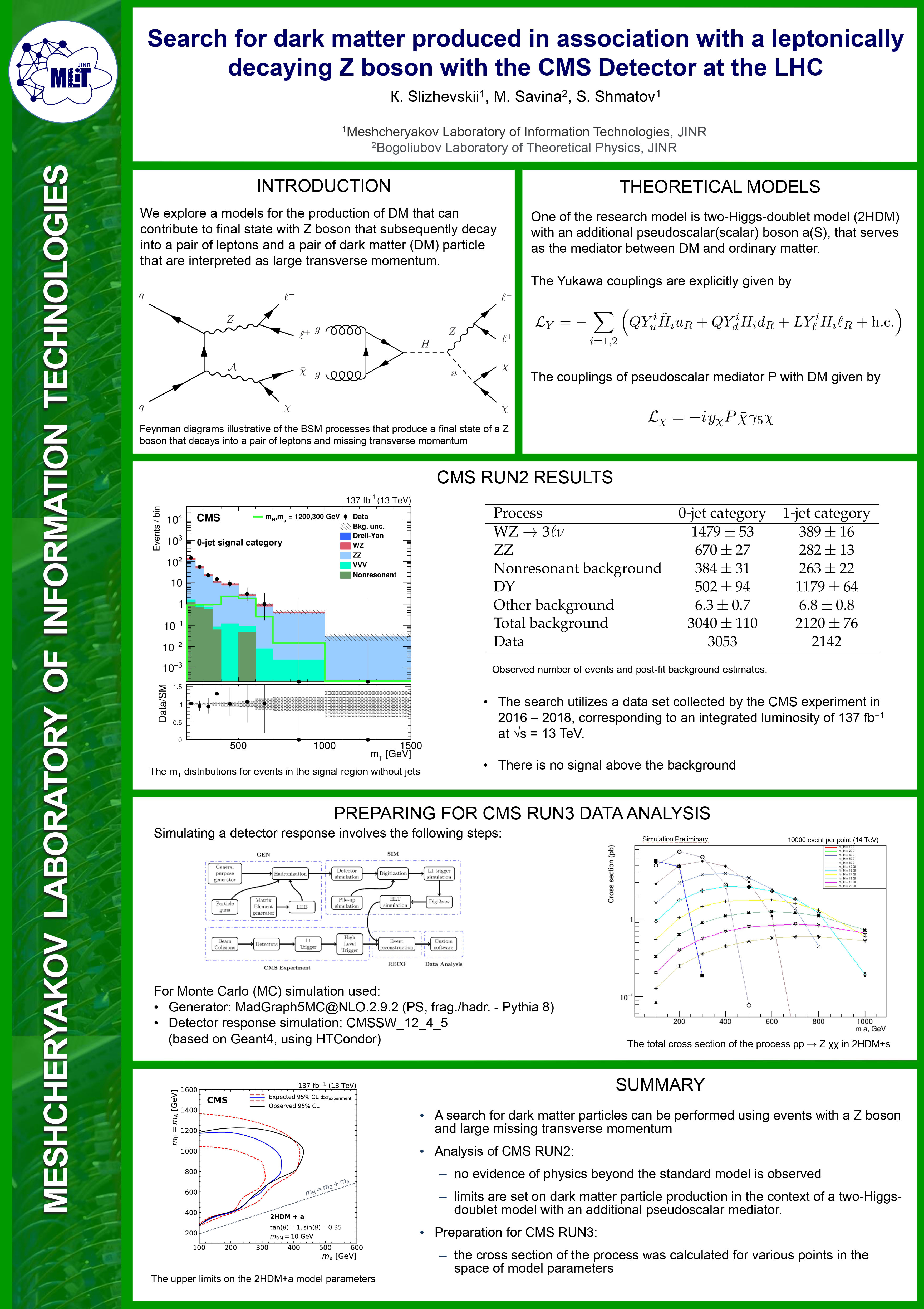

Search for dark matter produced in association with a leptonically decaying Z boson with the CMS Detector at the LHC

K. Slizhevskii, M. Savina, S. Shmatov

A search for dark matter particles is performed using events with a Z boson candidate and large missing transverse momentum. The analysis is based on data at a center-of-mass energy of 13 TeV, collected by the CMS experiment at the LHC and corresponding to an integrated luminosity of 137 fb⁻¹ (LHC Run 2). The search uses the decay channels Z → ee and Z → µµ. The results are interpreted in the context of simplified models with vector, axial-vector, scalar and pseudoscalar mediators, as well as a two-Higgs-doublet model with an additional pseudoscalar mediator (2HDM+S). Results are also presented from studies of the LHC's potential to observe the hypothetical particles predicted by the 2HDM+S/a models. This analysis was performed with Monte Carlo data produced for a center-of-mass energy of 13.6 TeV (LHC Run 3) and an integrated luminosity of up to 300 fb⁻¹.
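The key signature in this search is large missing transverse momentum, defined as the negative vector sum of the transverse momenta of all visible objects in the event. A minimal sketch of that definition follows. The function name and the input format (a list of `(pt, phi)` pairs) are assumptions for illustration, not the CMS reconstruction software.

```python
import math


def missing_transverse_momentum(visible_objects):
    """Return (magnitude, phi) of the missing transverse momentum.

    visible_objects: iterable of (pt, phi) pairs, pt in GeV and phi in radians.
    The missing transverse momentum is minus the vector sum of the
    transverse momenta of the visible objects.
    """
    sum_px = sum(pt * math.cos(phi) for pt, phi in visible_objects)
    sum_py = sum(pt * math.sin(phi) for pt, phi in visible_objects)
    met_x, met_y = -sum_px, -sum_py
    return math.hypot(met_x, met_y), math.atan2(met_y, met_x)


# Hypothetical event: two leptons from a Z candidate, both at phi = 0,
# with nothing visible recoiling against them.
leptons = [(60.0, 0.0), (40.0, 0.0)]
met, met_phi = missing_transverse_momentum(leptons)
print(f"missing transverse momentum: {met:.1f} GeV, pointing at phi = {met_phi:.2f}")
```

In this toy event the missing momentum balances the dilepton system exactly, which is the topology expected when a Z boson recoils against invisible particles.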